Artificial intelligence may seem intangible, but it relies on infrastructure and data centers that consume enormous amounts of energy, water, metals, and other resources. What do we know today about its impact on the environment? Let’s take stock.

Good news! While some pretty wild claims have been floating around in recent years about the true environmental footprint of generative artificial intelligence (AI), Google published its own calculations in August 2025¹. The tech giant reports that the median text query on its Gemini apps emits 0.03 g of CO₂e and consumes 0.26 ml of water (about five drops). Rather reassuring, isn’t it?

Not so fast: Google’s figures are much lower than those published by another generative AI developer, France’s Mistral AI. The latter carried out the same type of exercise last June and found that an average query (generating a page of text) on its Mistral Large 2 model emitted 1.14 g of CO₂e and consumed 45 ml of water², roughly 40 and 170 times Gemini’s figures, respectively.
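The "40 and 170 times" can be checked with a few lines of Python. This is only arithmetic on the published per-query figures; since the two companies measured different things in different ways, the ratio says as much about methodology as about the models themselves.

```python
# Per-query figures as reported in the article.
# Google: Gemini apps text query. Mistral AI: one page of text from Mistral Large 2.
GOOGLE = {"co2e_g": 0.03, "water_ml": 0.26}
MISTRAL = {"co2e_g": 1.14, "water_ml": 45.0}


def ratio(a: dict, b: dict, key: str) -> float:
    """How many times larger the metric `key` is in `a` than in `b`."""
    return a[key] / b[key]


co2_ratio = ratio(MISTRAL, GOOGLE, "co2e_g")
water_ratio = ratio(MISTRAL, GOOGLE, "water_ml")
print(f"CO2e: {co2_ratio:.0f}x, water: {water_ratio:.0f}x")  # CO2e: 38x, water: 173x
```

Rounded, these give the orders of magnitude quoted in the text.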

In search of reliable and comparable data

Why such a difference? The two companies apparently did not use the same methods. Google opted for a calculation that flatters its environmental image: it chose a favorable reference query (the median text prompt), left out the water needed to generate the electricity, and, more controversially, counted certain carbon credits and green electricity purchases in its favor.

Mistral AI, on the other hand, carried out a life-cycle analysis reflecting the computing resources required to develop and use its model, including the impacts of server manufacturing, not just energy consumption. Its result is therefore closer to reality.

And what about ChatGPT? According to Sam Altman, CEO of OpenAI, an average query consumes 0.34 Wh of energy, or “roughly what an oven would use in a little over a second, or what a high-efficiency light bulb would use in a few minutes. It also consumes about 0.000085 gallons of water [0.32 ml], or about one-fifteenth of a teaspoon.”³ This time, there is no mention of the carbon footprint and no information on the methodology used.
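Altman's unit conversions are easy to verify. The sketch below converts US gallons to milliliters and teaspoons using the exact US customary definitions, and turns 0.34 Wh into seconds of oven use. The 1,000 W oven power is an assumption chosen for illustration; real ovens draw anywhere from about one to several kilowatts, so "a little over a second" depends heavily on that choice.

```python
US_GALLON_ML = 3785.411784      # exact definition of the US gallon, in milliliters
US_TEASPOON_ML = 4.92892159375  # US customary teaspoon = 1/768 US gallon

query_energy_wh = 0.34          # Altman's average energy per ChatGPT query
query_water_gal = 0.000085      # Altman's average water per query, in US gallons

water_ml = query_water_gal * US_GALLON_ML
teaspoon_fraction = water_ml / US_TEASPOON_ML

# Assumption for illustration: an oven drawing 1,000 W. 1 Wh = 3,600 J.
OVEN_POWER_W = 1_000
oven_seconds = query_energy_wh * 3_600 / OVEN_POWER_W

print(f"water: {water_ml:.2f} ml, about 1/{1 / teaspoon_fraction:.0f} of a teaspoon")
print(f"energy: {oven_seconds:.1f} s of a {OVEN_POWER_W} W oven")
```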

The tree that hides the forest?

While tech companies’ willingness to inform users about the environmental impact of their AI is rather encouraging, we must face the facts: efforts to provide reliable information are still largely insufficient.

First, the footprint of an average query says nothing about the footprint of other types of responses: what is the footprint of a Studio Ghibli-style image or an AI-generated “starter pack,” for example? Or of a video? Second, without knowing what methodology was used, it is impossible to compare the impacts of different AIs. Finally, by reasoning in terms of a footprint “per query,” we place too much responsibility on individuals and overlook the cumulative global impacts of AI, which are the real cause for concern.
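The per-query framing hides how quickly small numbers add up. As a rough sketch, the function below scales a per-query energy figure to a yearly total. The one billion queries per day used here is a hypothetical round number, not a reported statistic, and 0.34 Wh is Altman's unverified average for ChatGPT.

```python
def annual_energy_gwh(wh_per_query: float, queries_per_day: float) -> float:
    """Scale a per-query energy figure to a yearly total, in gigawatt-hours."""
    wh_per_year = wh_per_query * queries_per_day * 365
    return wh_per_year / 1e9  # 1 GWh = 1e9 Wh


# Hypothetical volume: one billion queries per day at 0.34 Wh each.
# Training, hardware manufacturing, and water use are not included.
print(f"{annual_energy_gwh(0.34, 1e9):.0f} GWh per year")
```

Even at a fraction of a watt-hour per query, the total reaches the order of a hundred gigawatt-hours a year before training or hardware manufacturing is counted.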

The most acute problems lie in the energy and raw materials needed to build, power, and cool data centers and to recycle their components, and in all the harmful consequences that this flow of energy and materials has on the climate, water resources, and biodiversity. This is all the more true given that the number of data centers has been growing steadily in recent years.

A worrying acceleration in demand for computing power

The arrival of conversational agents such as ChatGPT in 2022 has led to exponential growth in the use of AI and, inevitably, an unprecedented acceleration in the construction of data centers to meet demand.

We are seeing the emergence of ever larger AI models that consume more data and require ever greater computing power.

For example, between 2018 and 2023, the size of large language models (LLMs) increased tenfold⁴. Multiplied on a global scale, this has many harmful consequences for the environment, which tech leaders carefully avoid talking about, preferring to tout the potential of AI to improve agriculture, climate forecasting, and biodiversity protection.

A significant underestimation

The independent consulting firm Carbone 4 closely examined the data on the training of Llama, the AI model developed by Meta, to assess its carbon footprint. According to its calculations, when the entire scope is taken into account (excluding usage), Llama’s training is associated not with 9,000 tCO₂e but with 19,000 tCO₂e⁵. It would appear that the major tech players sometimes bend the accounting rules by discounting part of the emissions from their electricity consumption on the basis of its claimed origin⁴.

Increase in the number of data centers

In 2024, there were around 8,000 data centers on the planet, accounting for 1.5% (425 TWh) of global electricity demand, with AI representing around 10% of data center consumption.

According to a report by the International Energy Agency (IEA), their consumption could rise to about 3% of total electricity consumption by 2030 (nearly 1,000 TWh), a colossal increase driven mainly by the expansion of AI and cryptocurrencies. In the United States, generative AI could account for 50% of data center electricity consumption.
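These figures imply a few things worth making explicit. Assuming the 1.5% share and the 425 TWh refer to the same year, and reading the 10% AI share as a fraction of data center consumption, a short calculation gives the implied global electricity demand, AI's share in TWh, and the compound annual growth rate needed to reach about 1,000 TWh by 2030.

```python
dc_2024_twh = 425       # data center electricity use in 2024 (article figure)
dc_share_2024 = 0.015   # 1.5% of global electricity demand
ai_share_of_dc = 0.10   # AI's share of data center demand (assumed reading)
dc_2030_twh = 1_000     # "nearly 1,000 TWh" projected for 2030

global_2024_twh = dc_2024_twh / dc_share_2024
ai_2024_twh = dc_2024_twh * ai_share_of_dc

# Compound annual growth rate (CAGR) between 2024 and 2030.
years = 2030 - 2024
cagr = (dc_2030_twh / dc_2024_twh) ** (1 / years) - 1

print(f"Implied global demand: {global_2024_twh:,.0f} TWh")
print(f"AI share of data centers: {ai_2024_twh:.1f} TWh")
print(f"Implied growth: {cagr:.1%} per year")
```

Data center consumption would have to grow by roughly 15% a year, far faster than overall electricity demand.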

Data centers require so much energy because they house thousands of servers that store data (text, videos, photos, etc.) and perform calculations (large-scale data processing and analysis using AI models). These calculations rely on specialized processors called GPUs (graphics processing units), which can perform millions of operations in parallel.

AI training: a particularly energy-intensive stage

Data centers are also where AI training takes place. This involves “showing” models millions of examples (taken from the internet in the case of generative AI such as ChatGPT or Claude) so that they learn the patterns in these examples and can then reproduce them and make predictions about data they have never seen before.

This energy-intensive and time-consuming step requires increasingly sophisticated GPUs and access to huge datasets (drawn from the internet and social networks). The most advanced AI models are trained on thousands of GPUs running in parallel within a single data center. These GPU “clusters” consume enormous amounts of electricity.
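The training step described in this section can be made concrete with a deliberately tiny sketch: a two-parameter model learns the rule y = 2x + 1 from ten hypothetical examples by gradient descent, then predicts a value it never saw. It is not how ChatGPT or Claude are trained, but the principle of adjusting parameters to fit examples is the same, and the counter shows why the amount of computation grows with the number of examples and of passes over them.

```python
# Hypothetical training data: ten examples that follow the rule y = 2x + 1.
examples = [(float(x), 2.0 * x + 1.0) for x in range(10)]

w, b = 0.0, 0.0          # the model's two parameters, initially untrained
learning_rate = 0.01
epochs = 2_000           # number of passes over the examples
evaluations = 0          # rough stand-in for the amount of computation

for _ in range(epochs):
    grad_w = grad_b = 0.0
    for x, y in examples:
        error = (w * x + b) - y                   # prediction minus target
        grad_w += 2 * error * x / len(examples)   # gradient of the mean squared error
        grad_b += 2 * error / len(examples)
        evaluations += 1
    w -= learning_rate * grad_w                   # nudge parameters to reduce the error
    b -= learning_rate * grad_b

print(f"learned w={w:.3f}, b={b:.3f}")
print(f"prediction for unseen x=20: {w * 20 + b:.2f}")
print(f"example evaluations: {evaluations:,}")
```

Doubling the examples or the passes doubles the work; frontier models repeat this kind of update across billions of parameters and trillions of tokens of text.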

A few years ago, data centers had a power capacity of a few dozen megawatts. By 2025, this has risen to several hundred megawatts. The largest centers announced will have a power capacity of 5 gigawatts, equivalent to the output of a large nuclear power plant⁷.
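Two conversions help read these numbers. A cluster's power is roughly the number of GPUs times the power each one draws, and a capacity in gigawatts becomes yearly energy in terawatt-hours once multiplied by the 8,760 hours in a year. In the sketch, the 10,000-GPU cluster and the 70% average load are hypothetical; 700 W is the maximum rating of NVIDIA's H100 SXM data center GPU; and the GPU figure excludes CPUs, networking, and cooling, so real consumption is higher.

```python
HOURS_PER_YEAR = 8_760  # 365 days x 24 hours


def cluster_power_mw(n_gpus: int, watts_per_gpu: float) -> float:
    """Power drawn by the GPUs alone, in megawatts (1 MW = 1,000,000 W)."""
    return n_gpus * watts_per_gpu / 1e6


def annual_energy_twh(capacity_gw: float, load_factor: float = 1.0) -> float:
    """Yearly energy for a site of given capacity at an average load factor (0 to 1)."""
    gwh = capacity_gw * HOURS_PER_YEAR * load_factor
    return gwh / 1_000  # 1 TWh = 1,000 GWh


# Hypothetical cluster: 10,000 GPUs rated at 700 W each.
gpu_mw = cluster_power_mw(10_000, 700)
print(f"10,000 GPUs: {gpu_mw:.1f} MW for the GPUs alone")

# A 5 GW site running flat out all year, then at a hypothetical 70% average load.
print(f"5 GW at full load: {annual_energy_twh(5):.1f} TWh per year")
print(f"5 GW at 70% load: {annual_energy_twh(5, 0.7):.1f} TWh per year")
```

At full load, a single 5 GW site would use roughly 44 TWh a year, about a tenth of the 425 TWh consumed by all the world's data centers in 2024.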

The larger these models are, the longer the training takes and the greater the electricity consumption. And when this electricity does not come from low-carbon sources (solar photovoltaic, wind, geothermal, hydropower, nuclear), as in the United States, where coal and gas are widely used to generate electricity, carbon emissions can quickly skyrocket.

Impact on local electricity grids

The massive demand for electricity will also put distribution and transmission networks under strain, causing overheating as well as voltage and frequency fluctuations that can damage equipment. Moreover, when data centers switch over to their backup fuel-oil generators, which they are equipped with to guard against supply disruptions, the switchover can trigger cascading outages.

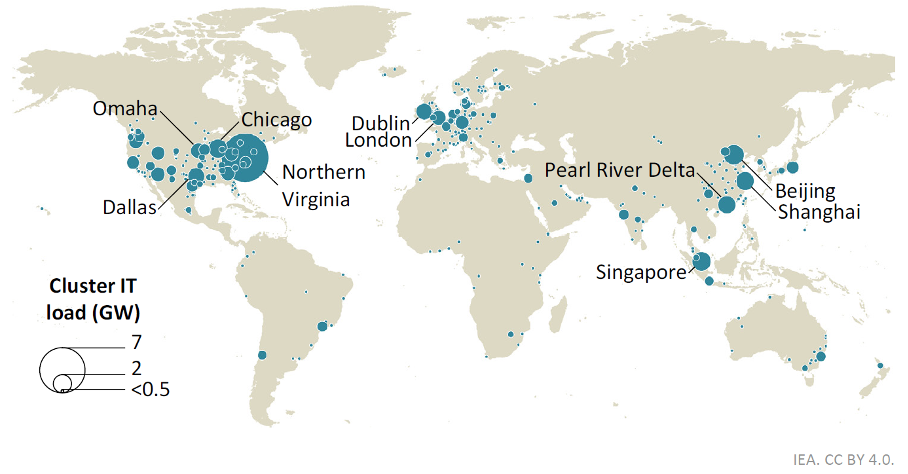

Data centers are often grouped into large clusters, which can pose challenges for local power grids.

Source: IEA report, April 2025⁶

What independent research shows

It is possible to measure the inference-related electricity consumption of so-called “open” AI models (open-weight models). Studies⁶,¹⁰ show that:

- Generating text is on average 25 times more energy-intensive than classifying spam.

- Generating an image is on average 60 times more energy-intensive than generating text, at around 1 to 3 Wh per image.

- Generating a 6-second video at 8 frames per second requires approximately 115 Wh, which is equivalent to charging two laptops.
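The figures in this list can be cross-checked against each other. The sketch below derives the implied energy per text generation, the energy per video frame, and the laptop battery size implied by "two laptops"; every input comes from the list, and the divisions are the only thing added.

```python
TEXT_TO_IMAGE_FACTOR = 60   # an image costs ~60x a text generation
IMAGE_WH = (1.0, 3.0)       # energy per generated image, low and high estimates
VIDEO_WH = 115.0            # one 6-second video at 8 frames per second
FRAMES = 6 * 8              # 48 frames in the clip

text_wh = [wh / TEXT_TO_IMAGE_FACTOR for wh in IMAGE_WH]  # implied energy per text
wh_per_frame = VIDEO_WH / FRAMES                          # energy per video frame
images_per_video = [VIDEO_WH / wh for wh in IMAGE_WH]     # images "worth" one video
laptop_battery_wh = VIDEO_WH / 2                          # implied by "two laptops"

print(f"text: {text_wh[0]:.3f} to {text_wh[1]:.3f} Wh per generation")
print(f"video: {wh_per_frame:.1f} Wh per frame, "
      f"{images_per_video[1]:.0f} to {images_per_video[0]:.0f} images per video")
print(f"implied laptop battery: {laptop_battery_wh:.1f} Wh")
```

Each frame of the video costs about as much as one generated image.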

Optimizing to reduce energy costs

To reduce the financial cost of powering these data centers, and incidentally their carbon footprint, tech giants are constantly seeking to optimize power consumption through various innovations: in software (lighter models such as small language models, or SLMs; quantization; pruning; distillation; Mixture of Experts, or MoE; etc.), in system deployment (batch inference, specialized compilers), and in computing hardware and infrastructure (GPUs, ultra-specialized chips, optimization of Power Usage Effectiveness, or PUE, etc.).
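Of the levers listed above, PUE is the simplest to express as a formula: total facility energy divided by the energy used by the IT equipment alone, so a value of 1.0 would mean no overhead at all for cooling, power conversion, or lighting. The sketch uses a hypothetical facility to show how much overhead energy a lower PUE saves.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy (ideal = 1.0)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Energy spent on everything other than IT equipment (cooling, power losses, etc.)."""
    return it_equipment_kwh * (pue_value - 1)


# Hypothetical facility: servers use 1,000 MWh while the whole site uses 1,500 MWh.
it_kwh = 1_000_000
print(f"PUE = {pue(1_500_000, it_kwh):.2f}")
for target in (1.5, 1.2, 1.1):
    print(f"PUE {target}: {overhead_kwh(it_kwh, target):,.0f} kWh of overhead")
```

Because the overhead scales with PUE minus one, going from 1.5 to 1.1 cuts it by 80% for the same computing work, without reducing the servers' own consumption.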

Despite these optimizations, the power required to train frontier AI models, and therefore the associated CO₂ emissions, continues to grow. According to a study by Epoch AI, a multidisciplinary research institute that studies the trajectory of AI, this power requirement is doubling every year¹¹.
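A quantity that doubles every year grows faster than intuition suggests. The helper below is pure arithmetic, not a forecast: it shows the multiplication factor after a given number of years if the annual doubling reported by Epoch AI were to continue.

```python
def growth_factor(years: float, doubling_time_years: float = 1.0) -> float:
    """Multiplication factor after `years` for a quantity that doubles every `doubling_time_years`."""
    return 2 ** (years / doubling_time_years)


for years in (1, 3, 5, 10):
    print(f"after {years:>2} years: x{growth_factor(years):,.0f}")
```

Five years of annual doubling means a factor of 32; ten years, a factor of more than 1,000.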

While training used to account for most of the emissions, the advent of generative AI and its mass adoption have reversed the order of things. Once a model has more than 100 million users (ChatGPT now reportedly has 1 billion!), the inference phase seems to consume more energy than training, and around 80% of the electricity consumed by AI-dedicated data centers now goes to inference.
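Why usage ends up outweighing training can be seen with a simple break-even calculation: training is a one-off cost, while inference energy accumulates every day. All inputs below are hypothetical: the 50,000 MWh training cost is an arbitrary illustrative value, not a figure for any specific model, and the one billion queries per day at 0.34 Wh each are round numbers for the sake of the example.

```python
def daily_inference_mwh(daily_queries: float, wh_per_query: float) -> float:
    """Energy spent answering queries in one day, in MWh."""
    return daily_queries * wh_per_query / 1e6


def breakeven_days(training_mwh: float, daily_queries: float, wh_per_query: float) -> float:
    """Days of use after which cumulative inference energy equals the training energy."""
    return training_mwh / daily_inference_mwh(daily_queries, wh_per_query)


def inference_share(days: float, training_mwh: float,
                    daily_queries: float, wh_per_query: float) -> float:
    """Fraction of total (training + inference) energy spent on inference after `days`."""
    inference = days * daily_inference_mwh(daily_queries, wh_per_query)
    return inference / (inference + training_mwh)


# Hypothetical inputs, for illustration only.
TRAINING_MWH = 50_000
QUERIES_PER_DAY = 1e9
WH_PER_QUERY = 0.34

days = breakeven_days(TRAINING_MWH, QUERIES_PER_DAY, WH_PER_QUERY)
share = inference_share(365, TRAINING_MWH, QUERIES_PER_DAY, WH_PER_QUERY)
print(f"break-even after {days:.0f} days")
print(f"inference share after one year: {share:.0%}")
```

With these made-up numbers, inference overtakes training within months and accounts for most of the energy after a year, which illustrates the mechanism behind the shift described in the text.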

According to the IEA⁶, CO₂ emissions linked to data center electricity consumption currently amount to 180 Mt (million tons) and are expected to reach between 300 Mt (baseline scenario) and 500 Mt (mass AI adoption scenario) in 2035. These figures do not include emissions linked to the life cycles of data centers and IT equipment, the manufacture of submarine cables, the construction of buildings, etc.
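The IEA range translates into an implied annual growth rate. The sketch assumes that "currently" refers to 2024; that base year is an assumption, and shifting it changes the rates slightly.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


BASE_MT = 180          # current data center emissions (article figure)
YEARS = 2035 - 2024    # assumption: "currently" means 2024

for label, target_mt in (("baseline", 300), ("mass AI adoption", 500)):
    rate = cagr(BASE_MT, target_mt, YEARS)
    print(f"{label}: x{target_mt / BASE_MT:.2f} by 2035, about {rate:.1%} per year")
```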

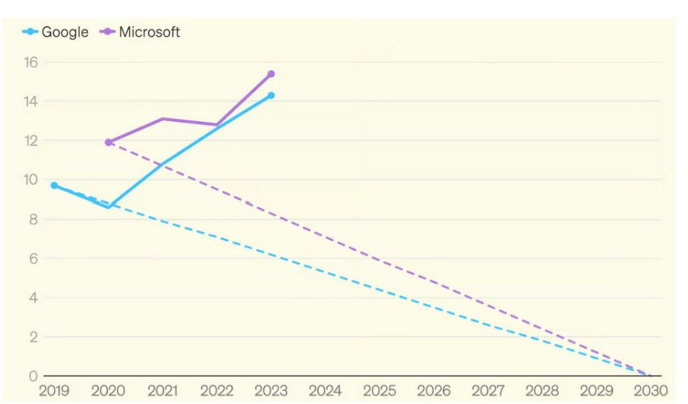

Climate targets called into question

This rush, and the urgent need to power new data centers, are leading not only to the postponement of coal-fired power plant closures but also to new gas projects. In the United States, new gas-fired power plants are expected to soon add 37 megawatts (MW) of generating capacity¹². Google has doubled its emissions since 2019 and Microsoft has increased its own by 30%, putting it 50% above its stated target. Yet before the generative AI rush, both companies were on track to decarbonize (see chart below).

Annual emissions from Microsoft and Google in millions of tons of CO₂ equivalent, compared to the average trajectory required to meet the carbon neutrality commitments made by both companies for 2030.

Source: IFP, 2024¹³

Significant water requirements

But electricity is used not only to power data center servers but also to cool them, since they operate continuously and generate a great deal of heat. Air cooling is commonly used to prevent overheating. Because it is particularly electricity-intensive, the largest operators now favor water-based cooling, such as adiabatic cooling, in which water is sprayed onto surfaces through which hot air passes; as the water evaporates, it cools the air. According to ADEME, the French Agency for Ecological Transition, a data center can consume up to 5 million liters of water per day, equivalent to the daily water needs of a city of 30,000 inhabitants.
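The ADEME comparison implies a per-person figure that makes it easier to sanity-check. Dividing the daily volume by the population gives the water use per inhabitant the comparison assumes; the annual total simply multiplies by 365 and assumes the maximum rate every day, which a real site will not necessarily reach.

```python
DAILY_LITERS = 5_000_000   # upper bound for one data center, per ADEME
POPULATION = 30_000        # size of the equivalent city

liters_per_person_per_day = DAILY_LITERS / POPULATION
annual_liters = DAILY_LITERS * 365  # only if the maximum were sustained all year

print(f"{liters_per_person_per_day:.0f} L per inhabitant per day")
print(f"{annual_liters / 1e9:.3f} billion liters per year at the maximum rate")
```

The comparison therefore assumes roughly 167 liters of water per inhabitant per day.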

However, techniques are undeniably improving. Direct liquid cooling, for example, is increasingly favored by tech giants to cool large GPU clusters. Less water-intensive, it brings the coolant into direct contact with the components to be cooled. However, it is more expensive to implement, and recycling the coolant could be problematic because it contains glycol⁴.

The amount of water consumed and withdrawn by operators also depends on how the electricity powering data centers is generated. Hydropower, nuclear, coal, and gas plants require large amounts of water (to drive turbines or to produce and cool steam), whereas solar and wind power require very little.

In 2023, Google reportedly withdrew 28 billion liters of water (compared with 16 billion in 2021) to operate its data centers, two-thirds of which was used for cooling¹⁴.
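These two data points imply rapid growth. The sketch computes the overall increase, the equivalent annual rate over the two years, and the volume attributed to cooling, using only the figures in the sentence above.

```python
withdrawn_2021 = 16e9   # liters
withdrawn_2023 = 28e9   # liters
cooling_share = 2 / 3   # "two-thirds" used for cooling

increase = withdrawn_2023 / withdrawn_2021 - 1
annual_rate = (withdrawn_2023 / withdrawn_2021) ** (1 / 2) - 1  # two years of growth
cooling_liters = withdrawn_2023 * cooling_share

print(f"+{increase:.0%} in two years, about {annual_rate:.0%} per year")
print(f"{cooling_liters / 1e9:.1f} billion liters for cooling")
```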

A problematic lack of transparency

To accurately determine the environmental impact of AI, various parameters need to be known: number of users, graphics card model, whether servers are optimized, the nature of these optimizations, type of data center, nature of the cooling system, location, carbon intensity of the local electricity grid, etc. According to a recent analysis¹⁵:

- There is no environmental information available for 84% of AI models.

- 14% do not publish anything but are open enough for external researchers to conduct energy studies.

- Only 2% are transparent models.

The issue of materials and their recycling

The manufacture of GPUs, which are used en masse in data center servers, requires not only water and energy but also numerous minerals such as tin, tantalum, gold, and tungsten, whose extraction is still often associated with local pollution. Furthermore, according to some researchers, the need for increasingly sophisticated chips for AI is creating new challenges, including a need for ever purer metals, which means higher energy consumption and greater reliance on the chemical industry¹⁶.

Finally, the proliferation of data centers poses another challenge: recycling their components, which contain particularly high levels of lead, cadmium, and mercury, toxic heavy metals that require secure recycling. Unfortunately, only 22% of electronic waste is currently recycled properly, with the rest ending up in informal landfills in countries of the Global South, with all the contamination risks this entails for workers.

According to a study published in Nature Computational Science at the end of 2024, AI could generate up to 5 million additional tons of electronic waste by 2030, on top of the 60 million tons already produced each year¹⁷.

Towards “frugal AI”?

In conclusion, while AI is a powerful tool that could help solve some of the environmental challenges we face, its rapid, exponential development clearly runs counter to the collective interest and our climate ambitions. Only an approach combining material efficiency with a critical look at how AI is used could make sense in the current environmental context.

Frugal AI, based on necessity, sobriety, and respect for planetary boundaries, could be a key lever for reconciling innovation and sustainability. Supported by the French national strategy since 2025, it aims to reduce the ecological footprint of AI systems while maintaining their performance. The AFNOR Spec 2314 standard, the result of extensive expert work, offers concrete tools for assessing and limiting this impact¹⁸.

Sources

1. How much energy does Google’s AI use? We did the math, Google Cloud, August 2025

3. The Gentle Singularity, Sam Altman (blog), June 2025

5. L’IA Générative… du changement climatique !, Carbone 4, March 2025

7. Here’s the 5GW, 30 million sq ft data center pitch doc OpenAI showed the White House

8. Southern Company to extend life of three coal plants due to data center energy demand, February 2025

9. A utility promised to stop burning coal. Then Google and Meta came to town.

10. Power Hungry Processing: Watts Driving the Cost of AI Deployment?, Luccioni et al., 2024

11. The power required to train frontier AI models is doubling annually, Epoch AI, 2024

12. Global Oil and Gas Plant Tracker, Global Energy Monitor, 2025

13. How to Build the Future of AI in the United States, IFP, October 2024

14. Google Environmental Report 2023

17. E-waste challenges of generative artificial intelligence, Nature Computational Science, October 2024

18. IA frugale, EcoLab, May 2025

Véronique Molénat, science writer